AUC Distracted Driver Dataset

Introduction

Creating a new dataset was essential to completing the work in [1]. The available alternatives to our dataset are the StateFarm and Southeast University (SEU) datasets. StateFarm’s dataset may be used only for the purposes of its Kaggle competition, as per the competition rules [2]. The SEU dataset presents only four distraction postures and, after multiple attempts to obtain it, we found that its authors do not make it publicly available. All the papers ([3], [4], [5], [6], [7], [8], [9]) that benchmarked against the SEU dataset are affiliated with either Southeast University, Xi’an Jiaotong-Liverpool University, or Liverpool University, and they share at least one author. The collected “distracted driver” dataset is therefore the first publicly available dataset for driving posture estimation research.

Camera Setup

The Distracted Driver dataset was collected using the rear camera of an ASUS ZenFone (Model Z00UD). The footage was recorded as video and then cut into individual images. The phone was fixed with an arm strap to the car roof handle above the front passenger’s seat. This setup proved very flexible for our use case, as we needed to collect data in different vehicles. All of the distraction activities were performed in a parked car, without actual driving.

Labeling

To label the collected videos, we designed a simple multi-platform action annotation tool using modern web technologies: Electron, AngularJS, and JavaScript. The annotation tool is open source and publicly available at [10].

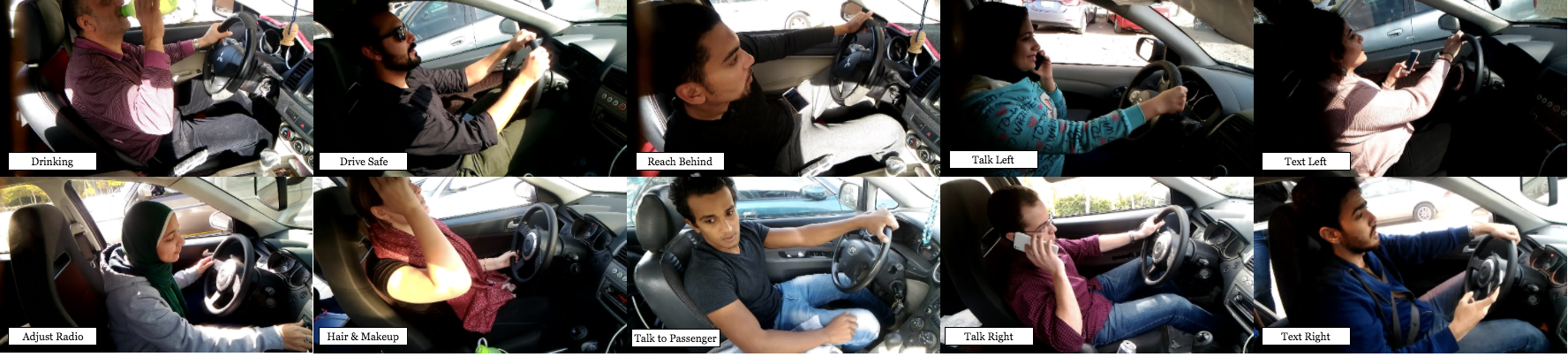

Statistics

We had 31 participants from 7 different countries: Egypt (24), Germany (2), USA (1), Canada (1), Uganda (1), Palestine (1), and Morocco (1). Of these participants, 22 were male and 9 were female. Videos were shot in 4 different cars: Proton Gen2 (26), Mitsubishi Lancer (2), Nissan Sunny (2), and KIA Carens (1). We extracted 17,308 frames distributed over the following 10 classes: Drive Safe (3,686), Talk Passenger (2,570), Text Right (1,974), Drink (1,612), Talk Left (1,361), Text Left (1,301), Talk Right (1,223), Adjust Radio (1,220), Hair & Makeup (1,202), and Reach Behind (1,159).
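
The class counts above are imbalanced, so it can help to look at each class's share of the frames before training. The following is a minimal sketch, not part of the original work: it checks that the per-class counts add up to the reported total and derives inverse-frequency class weights. The helper names `class_shares` and `balanced_class_weights` are hypothetical, and the weighting scheme is a common heuristic that is assumed here rather than taken from [1].

```python
# Per-class frame counts as listed in the Statistics section.
CLASS_COUNTS = {
    "Drive Safe": 3686,
    "Talk Passenger": 2570,
    "Text Right": 1974,
    "Drink": 1612,
    "Talk Left": 1361,
    "Text Left": 1301,
    "Talk Right": 1223,
    "Adjust Radio": 1220,
    "Hair & Makeup": 1202,
    "Reach Behind": 1159,
}

TOTAL_FRAMES = sum(CLASS_COUNTS.values())


def class_shares(counts):
    """Return each class's fraction of all frames."""
    total = sum(counts.values())
    return {name: n / total for name, n in counts.items()}


def balanced_class_weights(counts):
    """Inverse-frequency weights: total / (num_classes * count).

    Hypothetical helper (an assumption, not the dataset authors' method).
    Rare classes get weights above 1, frequent classes below 1.
    """
    total = sum(counts.values())
    num_classes = len(counts)
    return {name: total / (num_classes * n) for name, n in counts.items()}


if __name__ == "__main__":
    # The per-class counts should add up to the reported 17,308 frames.
    assert TOTAL_FRAMES == 17308
    shares = class_shares(CLASS_COUNTS)
    weights = balanced_class_weights(CLASS_COUNTS)
    for name, n in CLASS_COUNTS.items():
        print(f"{name:15s} {n:6d} {shares[name]:6.1%} weight={weights[name]:.2f}")
```

With these counts, Drive Safe is the largest class and has roughly three times as many frames as Reach Behind, the smallest. Whether to reweight or resample is a modeling choice left to the user.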

Samples

Benchmarking

In order to benchmark against our project, you need the training and testing splits:

License

- The dataset is the sole property of the Machine Intelligence group at the American University in Cairo (MI-AUC) and is protected by copyright. The dataset shall remain the exclusive property of the MI-AUC.

- The End User acquires no ownership, rights or title of any kind in all or any parts with regard to the dataset.

- Any commercial use of the dataset is strictly prohibited. Commercial use includes, but is not limited to: testing commercial systems; using screenshots of subjects from the dataset in advertisements; selling data or making any commercial use of the dataset; and broadcasting data from the dataset.

- The End User shall not, without prior authorization of the MI-AUC group, transfer in any way, permanently or temporarily, distribute or broadcast all or part of the dataset to third parties.

- The End User shall send all requests for the distribution of the dataset to the MI-AUC group.

- All publications that report on research that uses the dataset should cite the following technical report:

Y. Abouelnaga, H. Eraqi, and M. Moustafa. "Real-time Distracted Driver Posture Classification". Neural Information Processing Systems (NIPS 2018), Workshop on Machine Learning for Intelligent Transportation Systems, Dec. 2018.

- This database was captured to advance the state of the art in the detection of distracted drivers, and so it may be used freely for this purpose. Other research uses of this database are encouraged. However, the End User must first obtain consent from the MI-AUC group.

If you agree with the terms of the agreement, contact Yehya Abouelnaga (devyhia@aucegypt.edu) to download the database. Please sign the following document and attach it to your request: License Agreement.

References

- [1] Y. Abouelnaga, H. M. Eraqi, and M. N. Moustafa, “Real-time Distracted Driver Posture Classification,” arXiv preprint arXiv:1706.09498, 2017.

- [2] I. Sultan, “Academic purposes?,” Kaggle Forum, 2016 [Online]. Available at: https://www.kaggle.com/c/state-farm-distracted-driver-detection/discussion/20043#114916

- [3] S. Yan, Y. Teng, J. S. Smith, and B. Zhang, “Driver behavior recognition based on deep convolutional neural networks,” 12th International Conference on Natural Computation, Fuzzy Systems and Knowledge Discovery (ICNC-FSKD), pp. 636–641, 2016 [Online]. Available at: http://ieeexplore.ieee.org/document/7603248/

- [4] C. Yan, F. Coenen, and B. Zhang, “Driving posture recognition by convolutional neural networks,” IET Computer Vision, vol. 10, no. 2, pp. 103–114, 2016.

- [5] C. Yan, F. Coenen, and B. Zhang, “Driving Posture Recognition by Joint Application of Motion History Image and Pyramid Histogram of Oriented Gradients,” International Journal of Vehicular Technology, vol. 2014, 2014.

- [6] C. H. Zhao, B. L. Zhang, X. Z. Zhang, S. Q. Zhao, and H. X. Li, “Recognition of driving postures by combined features and random subspace ensemble of multilayer perceptron classifiers,” Neural Computing and Applications, vol. 22, no. 1, pp. 175–184, 2013.

- [7] C. Zhao, Y. Gao, J. He, and J. Lian, “Recognition of driving postures by multiwavelet transform and multilayer perceptron classifier,” Engineering Applications of Artificial Intelligence, vol. 25, no. 8, pp. 1677–1686, 2012.

- [8] C. Zhao, B. Zhang, J. Lian, J. He, T. Lin, and X. Zhang, “Classification of driving postures by support vector machines,” Proceedings - 6th International Conference on Image and Graphics, ICIG 2011, pp. 926–930, 2011.

- [9] C. H. Zhao, B. L. Zhang, J. He, and J. Lian, “Recognition of driving postures by contourlet transform and random forests,” IET Intelligent Transport Systems, vol. 6, no. 2, pp. 161–168, 2011.

- [10] Y. Abouelnaga, “Action Annotation Tool.” GitHub, Cairo, 2017 [Online]. Available at: https://github.com/devyhia/action-annotation